An update on AI regulation in Europe

Many countries are turning to artificial intelligence as a way of demonstrating their commitment to technological advancement, given its expected usefulness and potential. The regulatory framework for AI, however, is still in its infancy. This article reviews the main regulatory initiatives on artificial intelligence at the European level.

1. A preliminary reflection by the European Commission: communication, guidelines and a White Paper on artificial intelligence

Since 2018, the European Union has been gradually developing an artificial intelligence strategy.

The European Union began its reflections on artificial intelligence in the context of the digital single market by publishing a communication, “Artificial Intelligence for Europe”(1), in April 2018. Given the strategic importance of AI from legal, geopolitical, trade and security perspectives, the European Union aims to become a leading global player in innovation in the data-driven economy and its applications. To that end, the European Commission set up a high-level group of about 50 independent experts in June 2018 to define guidelines for achieving Trustworthy AI (2).

These guidelines were published in April 2019 and the members of this group notably recommended the following:

- “Ensure that the development, deployment and use of AI systems meet the seven key requirements for Trustworthy AI: (1) human agency and oversight, (2) technical robustness and safety, (3) privacy and data governance, (4) transparency, (5) diversity, non-discrimination and fairness, (6) environmental and societal well-being and (7) accountability.

- Consider technical and non-technical methods to ensure the implementation of those requirements.

- Foster research and innovation to contribute to the assessment of AI systems and to support the implementation of these requirements; disseminate results and open questions to the wider public, and ensure that training in AI ethics is systematically provided to the new generation of experts.

- Proactively communicate clear information to stakeholders on AI systems’ capabilities and limitations, enabling realistic expectation setting, as well as information on how the requirements are implemented. Be transparent about the fact that they are dealing with an AI system.

- Facilitate the traceability and auditability of AI systems, in particular in critical contexts or situations.

- Involve stakeholders throughout the AI systems’ life-cycle. Foster training and education so that all stakeholders are aware of the Trustworthy AI concept and trained within this field.

- Be mindful that there might be fundamental tensions between different principles and requirements. Continuously identify, evaluate, document and communicate these trade-offs and their solutions.”(3)

In February 2020, the European Commission set out its approach to artificial intelligence in a White Paper entitled “On Artificial Intelligence - A European approach to excellence and trust”(4), which was open for public consultation until May 2020.

The European Commission recommends that the definition of AI used in any new legal instrument be “sufficiently flexible to accommodate technical progress while being precise enough to provide the necessary legal certainty”(5). The Commission recalls that consumer protection and data protection rules will continue to apply to artificial intelligence technologies, although this framework may require some adjustments to accommodate digital transformation and the use of AI. As summarised by Cécile Crichton, “some of the issues raised by these technologies generate new risks, which, according to the Commission, relate to the infringement of fundamental rights, on the one hand, and to safety and the proper functioning of the liability regime, on the other hand (p. 12-15). In order to avoid this, a number of rules will be amended (p. 15-16), but above all new measures will be created (p. 21-26). These new measures will apply only to ‘high risk’ artificial intelligence applications (p. 20-21). It is specified that high risk is determined according to two cumulative criteria: on the one hand, according to the sector (e.g. ‘health care, transport, energy and certain parts of the public sector’) and, on the other hand, according to the use itself. Indeed, the Commission points out that a high-risk sector can include applications that do not carry any particular risk, such as ‘hospital appointment scheduling systems’”(6).
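The two cumulative criteria described in the White Paper can be sketched in a few lines of Python. This is only an illustration of the "sector AND use" logic: the class, the `risky_use` flag and the example applications other than hospital appointment scheduling are hypothetical, and the sector list simply reuses the examples quoted above rather than any official enumeration.

```python
from dataclasses import dataclass

# Sectors quoted as examples in the White Paper summary; illustrative, not exhaustive.
HIGH_RISK_SECTORS = {"health care", "transport", "energy", "public sector"}


@dataclass(frozen=True)
class AIApplication:
    name: str
    sector: str
    # Hypothetical flag: does the specific use itself create significant risk?
    risky_use: bool


def is_high_risk(app: AIApplication) -> bool:
    """Cumulative test: the sector criterion AND the use criterion must both hold."""
    return app.sector in HIGH_RISK_SECTORS and app.risky_use


# Example from the White Paper: high-risk sector, but the use itself is not risky.
scheduler = AIApplication("hospital appointment scheduling", "health care", risky_use=False)
# Hypothetical examples added for contrast.
triage = AIApplication("automated medical triage", "health care", risky_use=True)
chatbot = AIApplication("retail recommendation engine", "retail", risky_use=True)

for app in (scheduler, triage, chatbot):
    print(f"{app.name}: high risk = {is_high_risk(app)}")
```

Because the criteria are cumulative, only the second application would fall under the new measures in this sketch: the first fails the use criterion and the third fails the sector criterion.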

The European Commission states that in high-risk areas, AI systems should be transparent, traceable and subject to human oversight. In addition, authorities should be able to test and certify the data used by the algorithms. Unbiased data is needed to train high-risk systems to work properly and to ensure that fundamental rights are respected. For lower-risk AI applications, the European Commission is considering a voluntary labelling scheme for operators that choose to apply higher standards. The White Paper recommends that the European market should be open to all AI applications as long as they comply with EU rules. This White Paper served as a basis for the European Commission’s April 2021 proposal for a regulation on artificial intelligence.

2. A general framework: the proposed regulation on artificial intelligence

The European Commission chose a horizontal framework that addresses issues common to all uses of artificial intelligence. In April 2021, it unveiled the first legal framework on AI in the form of a proposal for a European regulation: the “Regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain Union legislative acts”(7). The text, which was open for public consultation until August 2021, aims to establish a European vision of AI based on ethics by using a common regulation to prevent the risks inherent to these technologies. This common regulation would in turn make it easier to regulate the various sectors in which artificial intelligence is applied while preventing certain abuses.

It is worth noting that the European Data Protection Board and the European Data Protection Supervisor published a joint opinion on the text on 18 June 2021(8). In this opinion, they acknowledge the effort put into defining protective rules for AI, but also express reservations and concerns about some provisions. In substance, the joint opinion calls for the AI Act to contain stricter and more protective rules and to take better account of the General Data Protection Regulation.

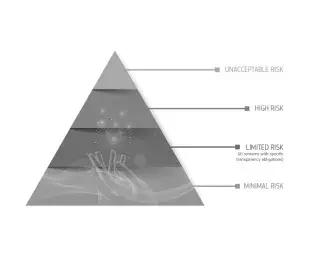

A risk-based approach

The European Commission puts forward a risk-based approach with four levels of risk:

- Risks regarded as unacceptable concern a very limited group of AI uses that are incompatible with the EU’s values because they jeopardise fundamental rights, and that will therefore be prohibited. This group includes, for example, social scoring by governments, the exploitation of children’s vulnerabilities, the use of subliminal techniques and, subject to strictly limited exceptions, the use by law enforcement of real-time remote biometric identification systems in public spaces.

- Risks regarded as high are exhaustively listed in the proposal because of their negative impact on individuals’ safety or on their fundamental rights as protected by the European Union’s Charter of Fundamental Rights. The list is designed to stand the test of time, as it can be updated to take changes in AI uses into account.

  - These systems notably include safety components of products or systems which are covered by the Union’s sectoral legislation. They will always be considered high risk when, under that sectoral legislation, they are subject to a third-party conformity assessment.

  - In order to promote trust and guarantee a high and uniform level of protection of safety and fundamental rights, mandatory requirements are proposed for all high-risk AI systems. These requirements concern the quality of the data sets used; technical documentation and record-keeping; transparency and the information given to users; human oversight; and robustness, accuracy and cybersecurity. Thanks to these requirements, national authorities will be able, in the event of a violation, to obtain the information needed to determine whether the AI system has been used in compliance with the legislation.

  - The proposed framework complies with the EU’s Charter of Fundamental Rights and is compatible with the EU’s international trade commitments.

- Risks regarded as limited are subject to specific transparency obligations, for example where there is a clear risk of manipulation (e.g. through the use of chatbots). Users must be informed when they are interacting with a machine.

- Finally, minimal risks cover all other AI systems. These can be developed and used provided that they comply with existing legislation, and they are not subject to any additional legal obligation. The large majority of AI systems currently used in the EU belong to this category. Suppliers of these systems can voluntarily choose to apply the requirements for trustworthy AI and to adhere to codes of conduct.

The European Commission proposes, alongside a definition of “high risk”, a method for identifying and listing high-risk AI systems for the purposes of the legal framework. The goal is to give companies and other operators legal certainty. The classification of risks is based on the intended purpose of the AI system, in line with existing EU product safety legislation. This means that the classification depends on the function performed by the AI system, as well as on the specific purpose for which the system is used and the manner in which it is used. The classification criteria include the extent of use of the AI application and its intended purpose; the number of people who may be affected; dependence on the outcome and the irreversibility of harm; and the extent to which existing EU legislation already provides effective measures to prevent or substantially reduce these risks.

A list of critical areas helps to clarify the classification. It covers applications in domains such as biometric identification and categorisation; critical infrastructure; education and training; recruitment and employment; the provision of important public or private services; law enforcement; asylum and immigration; and the judiciary.

A list of use cases that the Commission currently regards as high risk is annexed to the proposal. The Commission will ensure that this list is kept up to date on the basis of defined criteria, evidence and expert opinion, in consultation with stakeholders. Before a supplier can place a high-risk AI system on the EU market or otherwise put it into service, the system must undergo a conformity assessment. This will allow suppliers to demonstrate that their system complies with the mandatory requirements for trustworthy AI (for example, data quality; documentation; traceability; transparency; human oversight; accuracy and robustness). The assessment will have to be repeated if the system or its purpose is substantially modified. For some AI systems, an independent notified body will also have to be involved in the process. AI systems that are safety components of products covered by EU sectoral legislation will always be regarded as high risk when they are subject to a third-party conformity assessment under that sectoral legislation. Moreover, a third-party conformity assessment is always required for biometric identification systems.

Suppliers of high-risk AI systems will also have to implement quality and risk management systems to ensure that they comply with the new requirements and minimise risks to users and other affected parties, even after a product has been placed on the market. Market surveillance authorities will support post-market monitoring of high-risk AI systems through audits and by giving suppliers the possibility of reporting serious incidents or breaches of fundamental rights.

Application of the regulation, supervised by national supervisory authorities and the European Artificial Intelligence Board

The European Commission proposes that Member States play a key role in applying and enforcing this regulation. In this regard, each Member State shall designate one or more national authorities responsible for supervising the application and implementation of these rules and for carrying out market surveillance activities. In order to increase efficiency and guarantee an official point of contact with the public and with its counterparts, each Member State shall designate a national supervisory authority, which will also represent it on the European Artificial Intelligence Board.

This Board will be composed of high-level representatives of each national supervisory authority, the European Data Protection Supervisor and the European Commission. Its role will be to ensure that the regulation is implemented smoothly, effectively and consistently across all Member States. The Board will issue recommendations and opinions to the European Commission on high-risk AI systems and on other aspects relevant to the effective and uniform implementation of the new rules. It will also help to build expertise and act as a competence centre that national authorities will be able to consult. Finally, it will support standardisation activities in this field.

Planned sanctions

As it is a regulation, the text will be directly applicable, with an extra-territorial scope affecting many non-European actors. The proposed regulation provides that, where AI systems that do not meet its requirements are placed on the market or used, Member States will have to lay down effective, proportionate and dissuasive penalties, including administrative fines. If an infringement is identified, Member States will have to notify the European Commission.

The proposal sets the following thresholds for fines:

- Up to 30 million euros or 6% of the total worldwide annual turnover of the preceding financial year (whichever is higher) for infringements relating to prohibited practices or non-compliance with the data requirements;

- Up to 20 million euros or 4% of the total worldwide annual turnover of the preceding financial year (whichever is higher) for non-compliance with any of the other requirements or obligations laid down in the proposed regulation;

- Up to 10 million euros or 2% of the total worldwide annual turnover of the preceding financial year (whichever is higher) for supplying incorrect, incomplete or misleading information to notified bodies or to national competent authorities in response to a request.
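As a rough illustration of how these ceilings work in practice, the sketch below computes the maximum fine for a given turnover. It assumes that, for a company, the applicable ceiling is the higher of the fixed amount and the percentage of turnover; the tier names and the `max_fine` function are hypothetical labels chosen for this example, not terms from the proposal.

```python
from decimal import Decimal

# (fixed ceiling in euros, share of worldwide annual turnover) per tier.
# Tier names are hypothetical labels for the three levels listed above.
FINE_TIERS = {
    "prohibited_practice_or_data": (Decimal("30000000"), Decimal("0.06")),
    "other_obligation": (Decimal("20000000"), Decimal("0.04")),
    "misleading_information": (Decimal("10000000"), Decimal("0.02")),
}


def max_fine(tier: str, annual_turnover: Decimal) -> Decimal:
    """Return the maximum fine for a tier.

    Assumption: the ceiling is the higher of the fixed amount and the
    turnover-based amount.
    """
    fixed_ceiling, turnover_share = FINE_TIERS[tier]
    return max(fixed_ceiling, turnover_share * annual_turnover)


# A company with a turnover of 1 billion euros: 6% exceeds the fixed ceiling.
large = max_fine("prohibited_practice_or_data", Decimal("1000000000"))
# A company with a turnover of 100 million euros: the fixed ceiling applies.
small = max_fine("prohibited_practice_or_data", Decimal("100000000"))
print(large, small)
```

Under this reading, the percentage only becomes the binding ceiling once turnover exceeds 500 million euros, which is the point at which each percentage equals its fixed amount in all three tiers.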

The European Commission will rely on the opinion of the European Artificial Intelligence Board to develop guidelines aimed in particular at harmonising national rules and practices for setting administrative fines. The institutions, bodies and agencies of the European Union are expected to lead by example: they will have to comply with the rules and may themselves be subject to penalties. The European Data Protection Supervisor will have the power to impose fines on them.

Voluntary codes of conduct

The Proposal for a Regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence also relies on voluntary compliance mechanisms. Providers of applications that are not high risk will be able to ensure that their AI systems are trustworthy by drawing up their own voluntary codes of conduct or by adhering to the code of conduct of a representative association. For some AI systems, these voluntary codes of conduct will apply in addition to transparency obligations. The European Commission will encourage industry associations and other representative organisations to adopt such codes of conduct.

Expected timetable

The European Parliament hopes to adopt its position on the Proposal for a Regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence before November 2022. Nearly 300 amendments have been tabled by the European Parliament’s political groups to the draft report on the proposed regulation by Dragos Tudorache (Renew) and Brando Benifei (S&D). The secretariats of the Committee on Civil Liberties, Justice and Home Affairs (LIBE) and the Committee on the Internal Market and Consumer Protection (IMCO) sent the amendments to the offices of the Members of the European Parliament concerned on 14 June 2022. In these discussions, concerns were raised about facial recognition and about whether it should be prohibited or instead legally regulated. As indicated by Renaud Vedel, France’s national coordinator for artificial intelligence, France played an active role in the negotiation of this text during its presidency of the Council of the European Union. As with the General Data Protection Regulation, the text will not apply until two years after its adoption.

3. Planned sectoral legal frameworks

Machinery and equipment

The systemic risks arising from artificial intelligence systems can justify a vertical, sector-specific approach. Thus, in addition to the proposal for a regulation on artificial intelligence, the European Commission has introduced a proposal for a Regulation on Machinery Products which will replace the Machinery Directive 2006/42/EC of 17 May 2006(9). The aim of the proposal is to ensure that the new generation of machinery and equipment guarantees the safety of users and consumers whilst encouraging innovation. The European Commission defines "machinery and equipment" as a wide range of products intended for private or professional use, encompassing robots (cleaning, care, collaborative, industrial); lawnmowers; 3D printers; construction machinery; and industrial production lines. This text and the proposal for a Regulation on artificial intelligence are complementary. The AI regulation will address the risks associated with AI systems performing safety functions in machinery, while the Regulation on Machinery Products will ensure, where appropriate, that the AI system is safely integrated into the machine so that it does not compromise the safety of the machine as a whole. The European Commission has indicated that the scope, definitions and safety requirements of the new Machinery and Equipment Regulation will be adapted to ensure greater legal certainty and to take the characteristics of new machinery into account. Other provisions also aim to ensure a high level of safety, including classification rules for high-risk machinery. For example, machinery that has been substantially modified will be subject to a conformity assessment.

Platform workers

In addition, some artificial intelligence services rely on platform workers. In December 2021, the European Commission presented a set of measures to improve working conditions in platform work and to promote the sustainable growth of digital labour platforms in the European Union(10). In particular, the European Commission has published a Communication setting out the EU's approach and measures on platform work and a proposal for a Directive on improving working conditions in platform work. The latter provides measures to correctly determine the employment status of people working through digital labour platforms and establishes new rights for both employees and self-employed workers with regard to algorithmic management.

Further reading:

- Schwartz Julie, « Intelligence artificielle : les prémices d’une réglementation européenne », Cahier de droit de l’entreprise n°5, September 2021, p. 58-60.

- Schlich Rémy and Ziegler Laura, « Émergence d’un cadre légal harmonisé : de l’éthique à la conformité », Expertises des systèmes d'information n°471, September 2021, p. 309-317.

- Petel Antoine, « Publication de l'“Artificial Intelligence Act” : la Commission européenne dévoile sa vision pour encadrer l'intelligence artificielle », Revue Lamy droit de l’immatériel n°183, July 2021, p. 36-41.

4. And at the international level?

UNESCO published a Recommendation on the Ethics of Artificial Intelligence(11) in November 2021. Similarly, the Principles on Artificial Intelligence of the Organisation for Economic Co-operation and Development (OECD) promote innovative and trustworthy AI that respects human rights and democratic values. They were adopted in May 2019 by OECD member countries when they approved the Council Recommendation on Artificial Intelligence(12).

(1) European Commission, Communication from the Commission to the European Parliament, the European Council, the Council, the European Economic and Social Committee and the Committee of the Regions, Artificial Intelligence for Europe, COM(2018)237, April 2018 (French version), https://ec.europa.eu/transparency/documents-register/detail?ref=COM(2018)237&lang=fr.

(2) Independent High-Level Expert Group on Artificial Intelligence set up by the European Commission in June 2018, Ethics Guidelines for Trustworthy AI, Publications Office, 2019 (French version), https://data.europa.eu/doi/10.2759/54071.

(3) Independent High-Level Expert Group on Artificial Intelligence set up by the European Commission in June 2018, op. cit., p. 3.

(4) European Commission, White Paper on Artificial Intelligence - A European approach to excellence and trust, COM(2020) 65 final, February 2020 (French version), https://ec.europa.eu/info/sites/default/files/commission-white-paper-artificial-intelligence-feb2020_fr.pdf.

(5) White Paper cited above, p. 19.

(6) Crichton Cécile, “Publication par la Commission de son Livre blanc sur l’intelligence artificielle”, Dalloz actualité, February 2020, https://www.dalloz-actualite.fr/flash/publication-par-commission-de-son-livre-blanc-sur-l-intelligence-artificielle#.YiDhay3pNQI.

(7) European Commission, Proposal for a Regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain Union legislative acts, COM/2021/206 final, April 2021.

(8) EDPB-EDPS Joint Opinion 5/2021 on the proposal for a Regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act), June 2021 (French version), https://edps.europa.eu/system/files/2021-10/2021-06-18-edpb-edps_joint_opinion_ai_regulation_fr.pdf. For a summary of the opinion: CNIL, “Intelligence artificielle : l’avis de la CNIL et de ses homologues sur le futur règlement européen”, July 2021, https://www.cnil.fr/fr/intelligence-artificielle-lavis-de-la-cnil-et-de-ses-homologues-sur-le-futur-reglement-europeen.

(9) European Commission, Proposal for a Regulation of the European Parliament and of the Council on machinery products, COM(2021) 202 final, April 2021 (French version), https://eur-lex.europa.eu/legal-content/FR/TXT/HTML/?uri=CELEX:52021PC0202&from=EN

(10) European Commission, “Commission proposals to improve the working conditions of people working through digital labour platforms”, press release, December 2021 (French version), https://ec.europa.eu/commission/presscorner/detail/fr/ip_21_6605.

(11) UNESCO, Recommendation on the Ethics of Artificial Intelligence, SHS/BIO/REC-AIETHICS/2021, 2021, 21 p. (French version), https://unesdoc.unesco.org/ark:/48223/pf0000380455_fre.

(12) OECD, artificial intelligence page (French version): https://www.oecd.org/fr/numerique/intelligence-artificielle/.